| Data Mining and Enterprise Software (2003-2010) |

|

From 2003 to 2010, I worked for Fair Isaac Corporation, also known as FICO, most recently as Senior Research Scientist Lead. If I had any doubts, my tenure at FICO was enough to convince me of the importance and primacy of data in tomorrow's world. That tomorrow is now today! The core business of Fair Isaac remains generating predictive models and optimal strategies to maximize profit (or minimize loss) given the likelihoods of risks of all sorts: fraud, delinquency, attrition, switching and more. Financial corporations were the largest clients, but there were also significant operations in marketing and analytic consulting, as well as a research arm. With a background in statistical modeling and machine learning, I learned a lot by working with seasoned practitioners of either field. The combination of statistics and computer science was rather uncommon then, but has become more common since. An indicator of the relative immaturity of these fields is the appearance of textbooks such as this one by Kevin Murphy, which is impressive in its wide coverage and will improve further in the 3rd edition. |

| Machine Learning, Artificial Intelligence, Knowledge Discovery |

|

By the time I left FICO, I managed two teams of analytic scientists. All that meant was that I had simply aged. In the outside world, the most popular black-box machine learning algorithm had changed from the Neural Networks (ANN) that I knew from my years at college to Support Vector Machines (SVM). As of 2013, there is great interest in Deep learning, which illustrates how technology evolves cyclically but, like a true non-linear system, does not quite replicate its previous tours. Certainly there was much progress in the area of Statistical classification with huge amounts of data. What I enjoyed a lot was being an early contributor to, and later steward of, the internal wiki on data munging, predictive modeling and risk management, which helped us operate more efficiently in a global and distributed team environment. One interesting project I remember (because it was novel to me at the time) used a machine learning technique, Reinforcement learning, to optimize the next best action; we applied it to customers who had missed a payment. We did most of our work on Linux and Unix boxen, using gvim or emacs as our editors. We did use SAS for some projects, though it was generally discouraged. Most of the internal modeling work was performed through utilities written by scientists in C, and later in C++ and Java. For scripting, the preference was for Perl, with momentum building in favor of Python. |
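
As a rough illustration of the next-best-action idea (not the method we used at FICO, which is not described here), the simplest reinforcement learning setting is a one-state problem, the multi-armed bandit. The sketch below uses an epsilon-greedy rule: most of the time it takes the action with the best estimated outcome, and occasionally it tries another one to keep learning. The action names, the cure rates and the function names are all hypothetical.

```python
import random


def choose_action(estimates, epsilon, rng):
    """Explore a random action with probability epsilon, otherwise exploit."""
    actions = sorted(estimates)  # fixed order so ties break predictably
    if rng.random() < epsilon:
        return rng.choice(actions)
    return max(actions, key=lambda a: estimates[a])


def update_estimate(estimates, counts, action, reward):
    """Incrementally update the running mean reward of the chosen action."""
    counts[action] += 1
    estimates[action] += (reward - estimates[action]) / counts[action]


def simulate(true_cure_rates, rounds=5000, epsilon=0.1, seed=0):
    """Simulate contacts; reward is 1.0 if the customer becomes current again."""
    rng = random.Random(seed)
    estimates = {a: 0.0 for a in true_cure_rates}
    counts = {a: 0 for a in true_cure_rates}
    for _ in range(rounds):
        action = choose_action(estimates, epsilon, rng)
        cured = rng.random() < true_cure_rates[action]
        update_estimate(estimates, counts, action, 1.0 if cured else 0.0)
    return estimates, counts


# Hypothetical cure rates per contact strategy (made up for illustration).
rates = {"phone call": 0.70, "email": 0.50, "letter": 0.30}
estimates, counts = simulate(rates)
```

A real next-best-action system would also condition on the customer's state (balance, history, prior contacts), which turns the bandit into a contextual or full sequential decision problem.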

| Transaction Processing, Text Mining, Time Series and Behavioral Modeling |

|

A good part of my role was to envision and support the natural evolution of the product, such as building newer models, exploiting newer feeds or simply considering more classes of variables that could be derived either from a record or from a stream of records. The other part was to prototype product plug-ins that provided additional capability, and to demonstrate their value in PoC engagements or to internal stakeholders. As part of that, we built a library of user preferences by deciphering patterns using temporal order as well as brief textual information such as merchant name. Because the data are irregularly sampled, this is about as close to signal processing as one can get with such time series. Practitioners of Natural Language Processing (NLP) know that careful string comparisons work quite well with lower effort. Without a lot of context, the power of NLP cannot be unleashed. I developed the framework and techniques to assess customer loyalty to commoditized products, to quantify a customer's belongingness or membership to different classes, to support trigger-based marketing and to process textual data intelligently using linguistic and data-driven techniques. |
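
To make the point about careful string comparisons concrete, here is a minimal sketch using only the Python standard library. It is an illustration, not the framework described above: the cleaning rules, the 0.8 threshold and the function names are assumptions chosen for the example.

```python
import re
from difflib import SequenceMatcher


def normalize_merchant(raw):
    """Lowercase, replace digits and punctuation with spaces, collapse whitespace."""
    text = re.sub(r"[^a-z\s]", " ", raw.lower())
    return " ".join(text.split())


def similarity(a, b):
    """Similarity in [0, 1] between two merchant strings after normalization."""
    return SequenceMatcher(None, normalize_merchant(a), normalize_merchant(b)).ratio()


def same_merchant(a, b, threshold=0.8):
    """Treat two raw strings as the same merchant if they are similar enough.

    The threshold is an assumed value; in practice it would be tuned on
    labeled pairs.
    """
    return similarity(a, b) >= threshold


# Store numbers and punctuation differ, but the cleaned names are identical.
print(same_merchant("ACME HARDWARE 0042", "Acme Hardware #17"))
```

Dropping digits is a deliberate trade-off here: it merges different outlets of the same chain, which suits preference modeling but would be wrong if the store number itself mattered.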

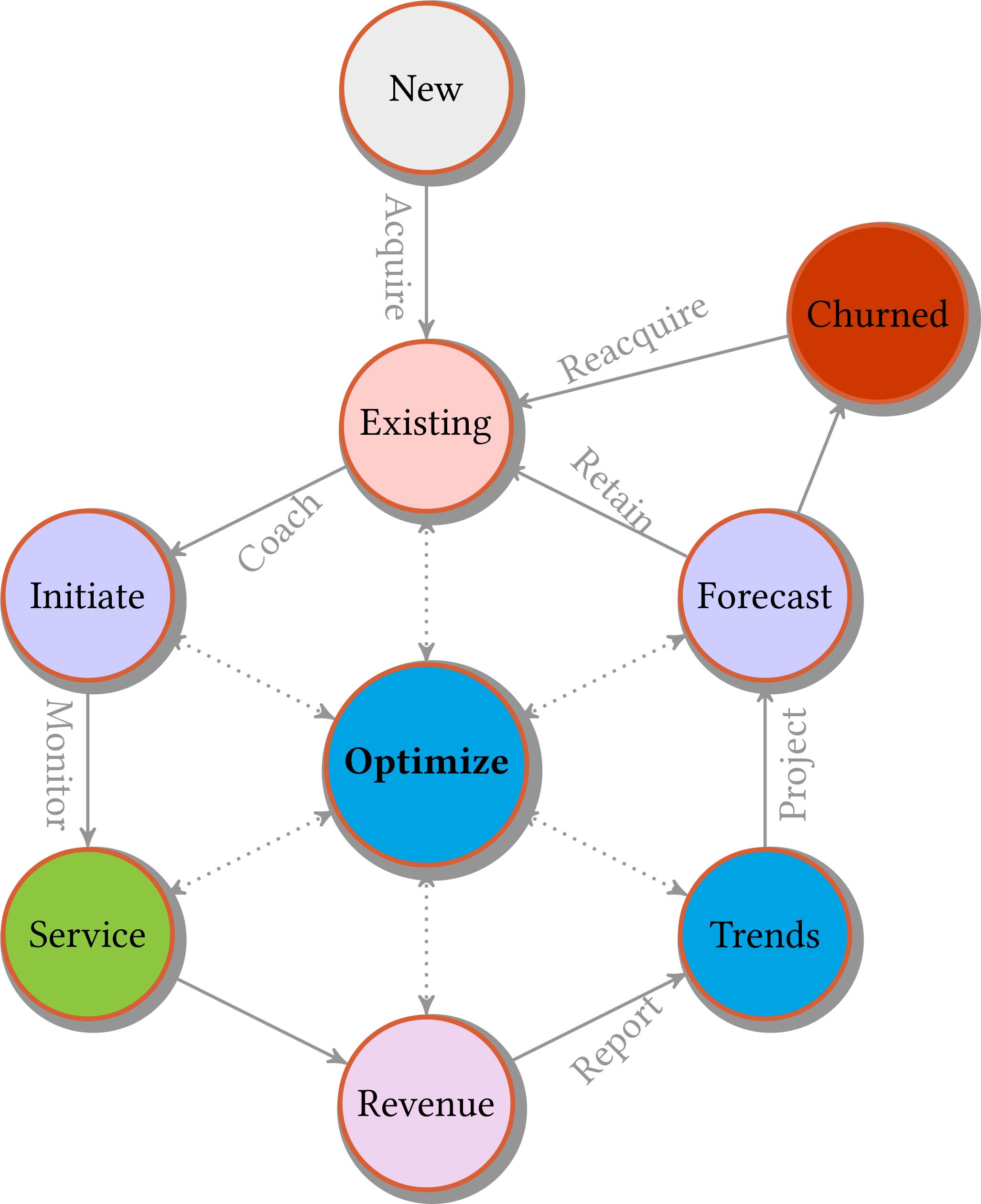

| Customer Lifecycle Management |

Complete CRM software has been talked about for a long time. End-to-end customer management platforms exist for specific industries such as retail banking. The general concepts, however, are applicable to other domains like search advertising or direct marketing. A simple CRM cycle is shown in the accompanying figure. We start from the node marked Existing, which contains all active customers at a certain point in time. The ideal next step could be to coach the customer through the early phase during Initiation. After that, and in general, there is a cost to serve for all customers, active or not. The next step is the Revenue generated. It is typical to monitor the trends and Forecast the spend for the near term. The forecast often indicates, probabilistically, which customers are more likely to churn or stop spending. Some of those advertisers are good candidates for reacquiring. Every transition in the cycle is different for different types of customers. High spenders are less likely to stop spending, on average, for example. How much to invest in servicing an account or in giving discounts of various sorts is the fundamental question for many businesses. Is it better to invest in reacquiring high spenders or in acquiring newer customers? A general slice of the population or businesses of a certain size? After that, one gets into the finer points -- if there are multiple promotions or offers, how does one match offers with customers to maximize short-term revenue as well as longer-term value, that is, Customer Lifetime Value? For the payment card industry, both Churn and Revenue are risks to be managed, since a customer might stop spending or not return the borrowed amount. The Initiation stage concerns itself with the original line of credit, which might be lower and more conservative for various reasons, including general economic volatility. The Revenue node is related to the interchange fees, the transaction-level cut of spend and the interest rate paid by the customer.
The Trends stage relates simply to the ongoing, revised risk of default: whether to give a credit limit increase or not. The Forecast part is perhaps more applicable to customers who have already missed at least one payment. Depending on the portfolio, 60 to 90% of those customers become current again, so the risk is not yet catastrophic. What offer should be provided first? Should the creditor offer to take 90% of the total due and call it a day? Should the contact be through phone or email? A lot of that is dictated by federal and state policy, but there is room to optimize. In my roles at FICO, we built predictive models for all nodes except Initiation, or rather Origination as it is known. Typical acquisition falls under the purview of marketing, but not always. The business might be very technical and complex or have too many regulations, in which case the role lies halfway between development/analytics and marketing. |
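
The questions above about which offer to make first, and through which channel, can be framed as comparing expected values. The sketch below is only that framing, with made-up numbers: the probabilities, collection fractions and contact costs are hypothetical, and real strategies must also respect the policy constraints mentioned above.

```python
def expected_recovery(balance, cure_probability, fraction_collected, contact_cost=0.0):
    """Expected amount recovered from one delinquent account under one offer."""
    return cure_probability * fraction_collected * balance - contact_cost


def rank_offers(balance, offers):
    """Return (offer name, expected value) pairs, best offer first."""
    scored = [
        (name, expected_recovery(balance, *params))
        for name, params in offers.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Each offer: (probability the customer pays, fraction of balance collected,
# cost of the contact). All values are hypothetical.
offers = {
    "full balance, email": (0.60, 1.00, 0.50),
    "90% settlement, phone": (0.75, 0.90, 5.00),
}
ranking = rank_offers(1000.0, offers)
for name, value in ranking:
    print(f"{name}: {value:.2f}")
```

With these assumed inputs the settlement offer comes out ahead because the higher chance of payment outweighs the 10% discount and the cost of the call; with different probabilities the ranking can flip, which is why the probabilities are worth modeling.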

| Product Management & Analytical Software |

|

For our internal analytic software development team, I was a practitioner and power user who could build out use cases and serve as the subject matter expert. I served on multiple focus groups for FICO's flagship product for building models -- Model Builder (TM) -- in areas covering algorithms for linear, logistic and generalized regression and Artificial Neural Networks (ANN); data processing steps such as data segmentation, summarization and outlier detection; and modeling steps such as binning, variable creation, model building, variable selection, model validation, model calibration and reporting. Earlier on in my career at FICO, I automated, streamlined and improved processes, mainly because I am lazy as in Larry Wall's dictionary. In today's world automation is a double-edged sword, some might say; however, enabling a larger team trumps individual interests any day. As one such example, I created a fully functional stand-alone Java application that encapsulated credit scoring models. The goal was to sell such solutions to smaller banks that did not want to install a specific CRM tool such as TRIAD (TM). |

| Data Mining Contest 2009 |

|

I was the organizer of the worldwide FICO UCSD Data Mining Contest for 2009, along with Prof. Charles Elkan. The contest was launched on May 15th, 2009 and closed on July 15th, 2009. The contest was a big success, with 301 teams from 35 countries. Only one of the twelve prizes went to a student at a US university, reflecting the global nature of the contest. There were two problems: the first one easy, the second one hard. For each problem we had three prizes for undergraduate students and three for graduate students or post-docs. Five of the six prizes for the undergraduate contest went to students from RWTH Aachen University, Germany. The hard problem had been designed in such a way that viewing the transaction data as a time series would reveal additional informative patterns. Further, the data set was tweaked to reward link analysis or training models repeatedly. Indeed, some winners volunteered that their use of Random forests was the critical component. Insights from the contest were not published, for such are the necessary trade-offs between academia and industry. |

| Marketing Analytics, Store Placement, Customer Lifecycle Management |

|

I also worked in the Marketing Analytics area. Earlier on I did the run-of-the-mill work, such as modeling customer propensity to respond to direct marketing offers, subscription renewal and customer segmentation. Some readers might remember their days in college with only two constant items in the mail: an AOL CD and a catalog from Fingerhut. Many of my colleagues in Marketing Analytics came from Fingerhut. One project, however, was quite fascinating: we showed that using the transaction-level data of the existing customer base, coupled with demographic information, results in powerful and truly new insights. For example, the zip code where the retail store is located is not as predictive as the zip codes the customers live in (and drive from). These insights were only possible because we combined historical behavioral information with demographic and macroeconomic information from the Census, and emphasized the distance people will drive for a value proposition that is attractive to them or is perhaps the only choice available. Though this project was done in 2004-2005, the similarity to social networks and inventory optimization is quite clear. |
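
One simple way to turn "the distance people will drive" into model inputs is to measure the distance from the store to the centroid of each customer's home zip code. The sketch below uses the great-circle (haversine) distance as a stand-in for driving distance. It is an illustration under assumptions, not the method from that project: the coordinates, the feature names and the choice of summary statistics are hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometers between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    # Clamp to 1.0 to guard against rounding error near antipodal points.
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))


def drive_distance_features(store, customer_centroids):
    """Summarize how far customers live from a store.

    `store` is a (lat, lon) pair and `customer_centroids` is a non-empty
    list of (lat, lon) pairs, one per customer home zip code.
    """
    distances = sorted(
        haversine_km(store[0], store[1], lat, lon)
        for lat, lon in customer_centroids
    )
    n = len(distances)
    if n % 2:
        median = distances[n // 2]
    else:
        median = (distances[n // 2 - 1] + distances[n // 2]) / 2
    return {"median_km": median, "max_km": distances[-1]}


# Hypothetical coordinates: a store and two customer zip code centroids.
features = drive_distance_features((0.0, 0.0), [(0.0, 0.0), (0.0, 1.0)])
```

Straight-line distance understates actual driving distance, so a production feature would more likely use road-network distance or drive time.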

| Bridge from R&D to Sales |

|

On some occasions I was the research scientist or engineer (anyone not in sales is an engineer!) who could talk to senior bankers and explain strategy and math in simple language. Due to a resource shortage, my team filled the gap between what Development would build and what it would not support. We helped client partners (aka account managers and sales people) make business cases for upselling and upgrading existing software solutions, and carried out Proofs of Concept for potential sales. Of course, things slowed down a fair bit during the 2007-2010 Financial Crisis. |

| Lagniappe |

|

I worked with very talented and capable colleagues at FICO. An added advantage was having a Toastmasters club on the premises, Hi-FI speakers, where I served a year as the VP of Education and earned Advanced Communicator Bronze (ACB) and Advanced Leader Bronze (ALB) along the way. If you are interested in my work, you may contact me here. |

| (c) Copyright 2013 Sandeep Rajput. All Rights Reserved. |

![Here's a Nickle for you, kid [Dilbert 21021.strip from dilbert.com]](../img/heres_a_nickle_unix_dilbert.gif)

![Eunuch Programmers [Dilbert 26446.strip from dilbert.com]](../img/unix_vs_eunuchs_dilbert.gif)